How to Conduct a Student Perception Survey for Better Learning Outcomes

Your student perception survey data might be the most underused resource in education right now. Here's what research reveals: students' perceptions of their current learning environment were a stronger predictor of learning outcomes at university than prior achievement at school. Yet most schools collect feedback, file it away, then wonder why problems keep repeating.

Here's a pain point that keeps administrators up at night: student retention rates keep dropping, but nobody knows why. Exit interviews reveal generic complaints like "didn't feel connected" or "classes weren't engaging." Meanwhile, 71% of educators believe personalized learning significantly enhances student engagement and achievement, but they're not tapping into the data that could make this possible.

But here's the reality check: traditional surveys are about as exciting as watching paint dry. Students zone out. Teachers get frustrated. And you're left with data that tells you nothing useful.

A well-designed student perception survey system can catch these disconnection warning signs early. Instead of waiting for students to transfer or drop out, you get real-time insights about what's actually happening in classrooms. Students tell you exactly what's working and what isn't - before it's too late to fix it.

Time to flip the script.

Why Student Perception Survey Results Are More Vital Than Your GPA Rankings

Picture this: Sarah sits in her chemistry class, totally confused by the teaching method. But she never speaks up. Meanwhile, the teacher thinks everything's going smoothly because nobody complains.

That disconnect? It happens every single day in classrooms across America.

Student perception survey tools bridge that gap. They give you the real story, not the sugar-coated version that makes everyone feel good at faculty meetings.

When you dig into actual student feedback, patterns emerge. Maybe your math department needs a communication overhaul. Perhaps your online learning platform makes students want to throw their laptops out the window. You won't know unless you ask.

The Problem With Old-School Feedback Collection

Traditional surveys are broken. Period.

Think about the last time you filled out a boring questionnaire. Did you rush through it? Give generic answers? That's exactly what your students do.

Here's what goes wrong:

- Questions sound like they were written by robots

- Students don't trust that anyone actually reads their responses

- The survey format feels more like homework than helpful feedback

- Results take forever to process and analyze

This creates a feedback loop of disappointment. Schools get useless data. Students feel ignored. Teachers get defensive about criticism they can't understand or act on.

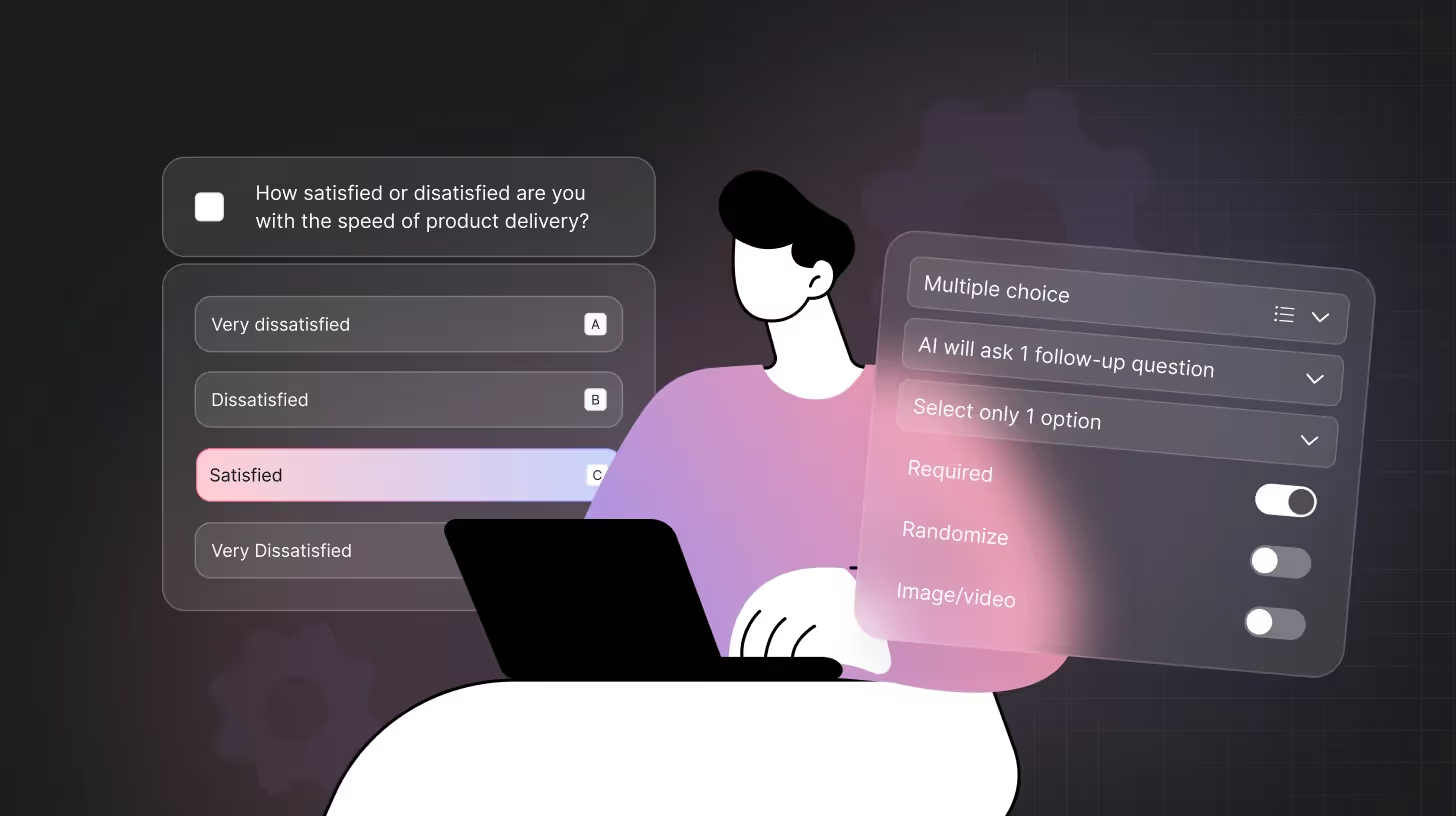

How AI Changes Student Perception Surveys

Smart schools are switching to AI-powered feedback systems that actually listen. Instead of static questions, students have real conversations with AI that digs deeper into their responses.

Imagine a student perception survey in which the AI asks follow-up questions like:

- "You mentioned feeling disconnected in class. Can you tell me more about that?"

- "What would make group projects more engaging for you?"

- "How could your teacher explain concepts differently?"

This approach uncovers the "why" behind student responses. Raw data transforms into actionable insights that drive real change.
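To make the follow-up idea concrete, here is a minimal sketch of how a survey tool might pick the next question. It uses simple keyword triggers as a stand-in for real AI, and the `FOLLOW_UPS` table, `DEFAULT_FOLLOW_UP`, and `pick_follow_up` are hypothetical names invented for illustration, not part of any specific product.

```python
# Hypothetical trigger phrases mapped to the follow-up questions listed above.
# A real conversational system would likely use a language model instead.
FOLLOW_UPS = {
    "disconnected": "You mentioned feeling disconnected in class. Can you tell me more about that?",
    "group project": "What would make group projects more engaging for you?",
    "explain": "How could your teacher explain concepts differently?",
}

# Generic probe used when no trigger phrase matches (an assumption).
DEFAULT_FOLLOW_UP = "Can you tell me more about that?"


def pick_follow_up(answer: str) -> str:
    """Return the first follow-up whose trigger phrase appears in the answer."""
    text = answer.lower()
    for trigger, question in FOLLOW_UPS.items():
        if trigger in text:
            return question
    return DEFAULT_FOLLOW_UP


print(pick_follow_up("Honestly, I feel disconnected most days."))
```

Even this crude version shows the core shift: the next question depends on what the student just said, rather than on a fixed script.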

Setting Up Your Student Perception Survey Strategy

First things first: ditch the assumption that all feedback tools work the same way. They don't.

Start with clear goals. Are you trying to improve classroom environment? Boost academic engagement? Figure out why students seem disengaged during certain subjects?

Your survey design should match those goals. Generic questions produce generic answers. Specific, conversation-style prompts get you gold.

Here's what actually works when setting up your feedback system:

- Define specific outcomes - Don't just ask "how satisfied are you?" Instead, target exact issues like "what makes it hard to participate in group discussions?"

- Choose the right timing - Sending surveys during finals week kills response rates. Deploy them mid-semester when students can actually reflect on their experiences

- Pick your audience carefully - First-year students need different questions than seniors. Tailor your approach to each group's unique perspective

- Set response expectations - Tell students upfront how long the survey takes and what you'll do with their feedback. Transparency builds trust

- Plan your follow-up - Have a system ready to act on feedback quickly. Students notice when their input gets ignored

Strategic timing makes all the difference. Deploy surveys when students have mental space to give thoughtful responses, not when they're cramming for exams or stressed about deadlines.

Questions That Get Useful Answers

Skip the "rate your satisfaction from 1-10" nonsense. Student attitudes are more complex than simple rating scales can capture.

Try questions like:

- "Describe a moment when you felt really engaged in this class"

- "What's the biggest barrier to your learning right now?"

- "If you could change one thing about how this subject gets taught, what would it be?"

Notice how these open up conversations instead of shutting them down? That's the difference between data that sits in a spreadsheet and insights that drive improvement.

Making Sense of What Students Actually Tell You

Here's where most schools mess up: they collect feedback, then let it gather digital dust.

Learning experience feedback requires careful analysis to translate raw survey responses into action items. When students say "the teacher goes too fast," that could mean different things. Maybe the pace needs adjusting. Or perhaps concepts need better explanation. Could be that visual learners need different materials.

AI analysis helps decode these patterns. Instead of spending hours reading through hundreds of responses, you get clear themes and suggested next steps.
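For a rough feel of what "clear themes" can look like, here is a minimal sketch that tallies themes across open-ended responses. It assumes a hand-written keyword list (`THEME_KEYWORDS`, purely hypothetical) instead of a trained model, so treat it as an illustration of the idea rather than how any particular AI tool works.

```python
from collections import Counter

# Hypothetical themes and trigger phrases; a real system would learn these
# from the responses rather than rely on a fixed list.
THEME_KEYWORDS = {
    "pace": ["too fast", "rushed", "slow down"],
    "clarity": ["confusing", "unclear", "don't understand"],
    "materials": ["slides", "visual", "diagram"],
}


def tag_themes(response: str) -> list[str]:
    """Return every theme whose keywords appear in a single response."""
    text = response.lower()
    return [
        theme
        for theme, keywords in THEME_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    ]


def count_themes(responses: list[str]) -> Counter:
    """Tally how many responses mention each theme."""
    counts = Counter()
    for response in responses:
        counts.update(tag_themes(response))
    return counts


# Made-up sample responses for demonstration only.
responses = [
    "The teacher goes too fast and the slides are hard to read.",
    "Explanations are confusing.",
    "I wish we could slow down before tests.",
]
theme_counts = count_themes(responses)
print(theme_counts.most_common())
```

In this made-up sample, "pace" comes up twice and the other themes once each, which is the kind of ranking that tells you where to look first.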

Common Mistakes That Kill Survey Effectiveness

Survey fatigue is real. Sending too many questionnaires trains students to ignore them. Schools that blast out weekly surveys wonder why response rates crater after the first month.

Another killer: asking for feedback but never showing what changed as a result. Students lose trust fast when their time gets wasted. They start thinking surveys are just administrative theater with boxes to check rather than tools for improvement.

Technical issues matter too. If your survey platform crashes or takes forever to load, students won't complete it. Mobile-friendly design isn't optional anymore. Students expect surveys to work seamlessly on their phones during breaks between classes.

Timing mistakes hurt participation rates. Launching surveys during finals week or right before spring break guarantees poor response rates. Students have bigger priorities than sharing their opinions when they're stressed about exams.
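One simple way to spot survey fatigue or bad timing is to track the response rate for each survey you send. The sketch below uses made-up numbers, and the 15-point drop threshold is an assumption chosen for illustration, not a research-backed cutoff.

```python
def response_rate(completed: int, invited: int) -> float:
    """Share of invited students who completed the survey."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    return completed / invited


# Hypothetical survey waves: (label, completed, invited).
waves = [("Week 1", 180, 300), ("Week 2", 150, 300), ("Week 3", 90, 300)]
rates = [(label, response_rate(done, sent)) for label, done, sent in waves]

# Assumed threshold: flag a wave if its rate fell by more than 15 points.
DROP_THRESHOLD = 0.15
flagged = [
    label
    for (label, rate), (_, previous) in zip(rates[1:], rates)
    if previous - rate > DROP_THRESHOLD
]

for label, rate in rates:
    print(f"{label}: {rate:.0%}")
print("Sharp drops:", flagged)
```

A sharp drop like the one flagged here is a cue to check whether you sent too many surveys or launched one at a bad moment.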

The AI Advantage in Modern Feedback Collection

Traditional surveys are like taking photographs. AI-powered systems are like having ongoing conversations.

When students interact with AI during surveys, several things happen:

- Responses become more detailed and honest

- Follow-up questions reveal deeper insights

- Natural language processing identifies sentiment and themes

- Predictive analysis helps prevent problems before they escalate

This isn't just theoretical. Schools using AI feedback tools report higher response rates and more actionable insights.

Turning Survey Data Into Real Change

Data without action is just expensive digital storage. The magic happens when survey insights drive specific improvements.

Successful schools create feedback loops that connect student input directly to policy changes, teaching adjustments, and resource allocation decisions. Students see their voices making a difference.

This creates a positive cycle. Better experiences lead to higher student satisfaction and more honest feedback. More honest feedback drives better decisions. Better decisions improve experiences.

Read - Student Satisfaction Survey Guide: Questions, Tips, and Free Template

The Power of AI Feedback for Educators

Modern AI survey platforms transform how educational institutions understand and respond to student needs. Instead of guessing what students think, schools get direct access to authentic, detailed feedback that guides meaningful improvement.

TheySaid makes this transformation possible.

Our AI-powered approach helps schools move beyond basic questionnaires toward genuine dialogue with students. When institutions embrace this shift, everyone benefits. Students feel heard, teachers get useful guidance, and administrators make data-driven decisions that actually work.

Sign up and try TheySaid today!

Key Takeaways

- Start conversations, not interrogations - Replace rigid survey questions with AI-powered dialogue that encourages detailed student responses

- Act on feedback quickly - Show students their input matters by implementing visible changes based on their suggestions

- Focus on specific improvements - Use targeted questions to address particular classroom challenges rather than generic satisfaction ratings

- Create ongoing feedback loops - Regular collection and response cycles build trust and a culture of continuous improvement

- Leverage AI for deeper insights - Modern survey tools reveal patterns and themes that manual analysis might miss

The future of education improvement starts with actually listening to the people you're trying to serve. Student perception survey data holds the key to creating learning environments where everyone thrives. Schools that master this approach will leave their competition wondering what happened.

Frequently Asked Questions

Q: How often should schools conduct a student perception survey?

A: The most effective programs collect feedback 2-3 times per semester, timed around mid-term and end-of-course periods to capture evolving student perspectives without creating survey fatigue.

Q: What's the difference between traditional surveys and AI-powered feedback collection?

A: AI surveys engage students in conversations rather than just asking them to rate experiences, leading to deeper insights and higher response rates through natural dialogue.

Q: How can teachers overcome resistance to student feedback?

A: Focus on framing feedback as a professional development opportunity rather than a performance evaluation, and always share how specific suggestions led to classroom improvements.

Q: What response rate should schools expect from student perception surveys?

A: Well-designed AI surveys typically achieve 60-80% response rates compared to 20-40% for traditional questionnaires, due to their engaging, conversational format.

Q: How quickly can schools see improvements after implementing better feedback systems?

A: Most institutions notice increased student engagement within one semester, with measurable academic and satisfaction improvements appearing within a full academic year.
