Tips & Tricks for Gathering Better Feedback with AI

Most feedback tools give you data. TheySaid gives you conversations.

The difference? Data tells you what happened. Conversations tell you why, and that's where the real fixes live. Whether you're trying to cut churn, speed up onboarding, or figure out why features flop, open-ended feedback with smart AI follow-ups gets you closer to the truth than any 5-star rating ever could.

This guide walks through nine strategies that turn generic surveys into insight engines. You'll learn how to set up questions that actually invite honest answers, train AI to sound like your brand, and push findings straight into Slack or your CRM so they don't rot in a dashboard. We'll also cover voice mode, conditional logic, and a plug-and-play recipe you can launch today.

By the end, you'll know how to collect feedback that's rich enough to act on and automate enough of the grunt work that you'll actually want to read it.


What Is AI-Powered Feedback Collection?

AI-powered feedback collection uses large language models to run dynamic, two-way conversations with your users. Instead of static surveys that ask the same five questions to everyone, the AI listens to each response and asks clarifying follow-ups in real time.

Think of it like a user interview that scales. You write the opening prompt. The AI takes over from there, probing when someone mentions a pain point, asking for examples when an answer is vague, and skipping irrelevant questions when it's clear they don't apply. The result is qualitative data that feels personal but doesn't require you to sit through 200 Zoom calls.

TheySaid is built specifically for this. You define the goals, upload context about your product, and let the platform handle the back-and-forth. Every response gets transcribed, tagged by theme, and summarized so you can spot patterns without reading every word.

Common Use Cases for AI Feedback Tools

Post-purchase check-ins

Send a conversational survey 48 hours after someone buys. Ask what almost stopped them, what sold them, and whether the setup met expectations. Use answers to tweak onboarding emails and product messaging.

Churn interviews at scale

When someone cancels, trigger a voice-enabled exit interview. Ask what broke, what they tried instead, and what would've kept them. Route "billing confusion" themes to finance; "missing features" to product.

Feature validation before you build

Show a prototype or describe a concept, then ask users to walk through their reaction. AI follow-ups probe edge cases and surface objections you didn't think to ask about.

Onboarding pulse checks

After a user hits a milestone (first project, first invite sent), ask how it went. If they mention friction, the AI digs into specifics. If they breeze through, it skips the extra questions.

In-app feedback widgets

Drop a chat bubble on high-friction pages (checkout, account settings, integrations). Users can leave quick voice or text feedback. AI tags and escalates urgent issues in real time.

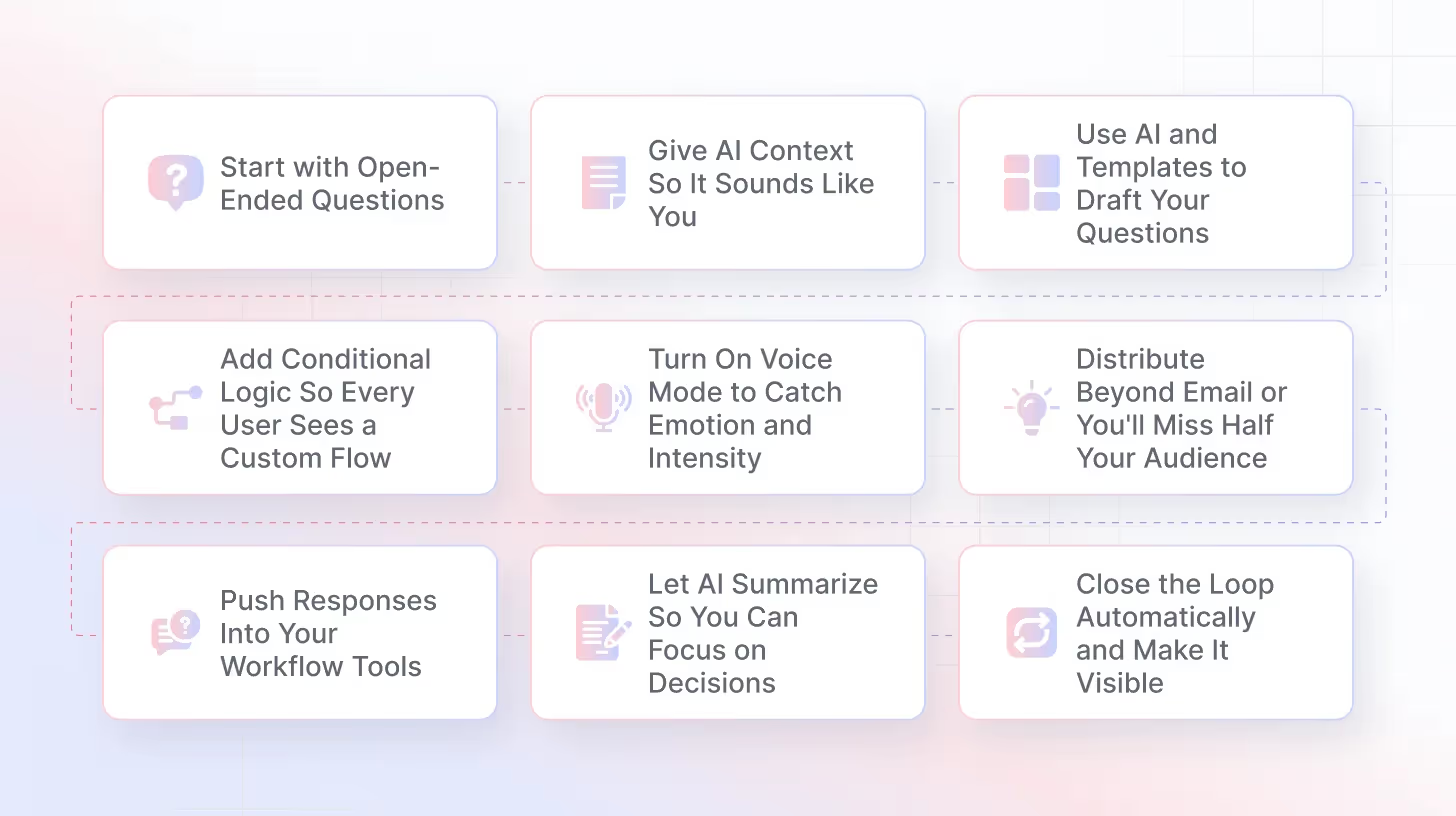

How to Build Better Feedback Flows in 9 Steps

Step 1: Start with Open-Ended Questions

Rating scales and multiple-choice questions feel efficient. They're not. They box people into your assumptions and miss the stuff you didn't know to ask about.

Open-ended prompts like "Tell me about…" or "Walk me through…" let users explain in their own words. That gives AI room to probe the parts that matter. Does someone say onboarding "took forever"? The AI asks what step slowed them down. Does someone mention a workaround? It asks why the default flow didn't work.

How to set it up in TheySaid:

Go to Projects → Questions. Write your main prompt as a text field, not a dropdown. Keep it short: "What made signup hard?" or "Walk me through your first week using [product]."

Add one or two targeted follow-up prompts manually if you want to steer the conversation, like "What made that difficult?" Then toggle on AI follow-ups so the system can ask its own clarifying questions based on the response.

Keep one guardrail question at the end for prioritization: "If we could fix one thing next, what should it be?" That gives you a clear signal even if the rest of the conversation meanders.

Step 2: Give AI Context So It Sounds Like You

Generic AI sounds like generic AI. If you want conversations that feel on-brand and ask relevant follow-ups, you need to feed the model information about your product, audience, and tone.

TheySaid lets you upload docs, link to help centers, and add a plain-text brief so the AI knows what to reference. That means when someone mentions "the dashboard," the AI knows which dashboard. When someone says "pricing felt confusing," it can ask about the specific plan tiers you offer.

How to do it:

Open your project and click the Teach AI panel. Upload PDFs (product docs, onboarding guides, release notes), paste URLs (pricing page, support articles), or drop in a short brief.

In the brief, include:

- Who you're talking to (new users, churned accounts, enterprise trials)

- Tone (friendly and casual, or more formal)

- Topics to avoid (don't ask about competitors, don't probe pricing if it's sensitive)

- Known hypotheses ("We think onboarding breaks at the API setup step")

Toggle "Use context in follow-ups" so probes can cite your specifics. The AI will sound less like a chatbot and more like someone who actually knows your product.

Step 3: Use AI and Templates to Draft Your Questions

You don't need to write everything from scratch. TheySaid has templates for common scenarios like post-churn interviews, onboarding pulses, and feature feedback, plus an AI builder that drafts questions based on your goal.

Start by describing what you're trying to learn. The AI proposes 6–10 questions with branching logic. You prune it down to the 5–7 essentials, tweak the wording, and you're live.

How to do it:

Go to + Add Project → Template. Describe your goal in a sentence: "I want to understand why new Pro users take longer than 15 minutes to finish onboarding."

The AI suggests questions. Review them. Cut anything redundant or too vague. Reorder so the most important prompt comes first. Save the result as a template if your team will run similar projects later.

This cuts setup time from an hour to ten minutes and reduces blind spots. You'll catch angles you wouldn't have thought to ask about.

Step 4: Add Conditional Logic So Every User Sees a Custom Flow

Not every question applies to every user. If someone's a free-plan user, don't ask about enterprise features. If they rated something highly, skip the "what went wrong" follow-up.

Conditional logic routes people through different paths based on their answers. That keeps surveys short and relevant, which boosts completion rates and gives you denser signal.

How to set it up:

In the Questions panel, click Branch. Set rules based on how people respond.

Example flow:

Question: "Overall, how much value do you receive from our product?" (Rating 1–5)

- If user rates 5 → ask them to write a Google Review

- If user rates 4 → ask if they want training to get more value from the product

- If user rates 1–3 → ask if they would like someone to contact them to fix their issues
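Stripped of the UI, the rating branch above is just a mapping from score to next prompt. The sketch below writes those three rules out in plain Python so the paths are explicit. The function name and prompt wording are hypothetical illustrations, not TheySaid's branching format.

```python
def next_prompt(rating: int) -> str:
    """Hypothetical helper: pick the follow-up prompt for a 1-5 value rating."""
    if not 1 <= rating <= 5:
        raise ValueError("rating must be between 1 and 5")
    if rating == 5:
        # Happy users: ask for public advocacy.
        return "Would you be willing to write us a Google Review?"
    if rating == 4:
        # Satisfied but not delighted: offer help getting more value.
        return "Would training help you get more value from the product?"
    # Ratings 1-3 all share the same recovery path.
    return "Would you like someone to contact you to fix your issues?"


for score in (5, 4, 2):
    print(score, "->", next_prompt(score))
```

Collapsing 1–3 into one branch keeps the flow short while still separating advocates, fence-sitters, and at-risk users.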

You can branch by persona, plan tier, feature usage, sentiment, or any custom property you pass in via your CRM or CDP.

Add a catch-all question at the end: "Anything else we should know?" That gives people a way to share something you didn't anticipate.

Step 5: Turn On Voice Mode to Catch Emotion and Intensity

Text is great for clarity. Voice is better for sentiment. You hear hesitation, frustration, and excitement: all the stuff that gets flattened when someone types. Voice is also faster, and users can talk while they're doing something else.

TheySaid transcribes and analyzes voice responses just like text. You'll see sentiment markers, intensity flags, and exact quotes you can clip for stakeholder decks.

How to enable it:

Go to Project Settings and toggle Voice Mode on.

Write at least one prompt optimized for voice. Instead of "Rate your onboarding experience from 1–10," try "In your own words, what felt rough about setup?"

When you review responses, the AI analyzes sentiment strength automatically and flags what needs attention. If someone sounds frustrated but uses polite language, the system marks it. If they're enthusiastic, it highlights that as validation.

The AI summarizes key themes and surfaces notable quotes. You can clip these and share them with your team. Hearing a user say "I almost quit because I couldn't find the import button" hits harder than reading it in a spreadsheet.

Step 6: Distribute Beyond Email or You'll Miss Half Your Audience

Email surveys work for some people. Not everyone. If you only send feedback requests via email, you're ignoring users who check their inbox once a week or treat your messages like spam.

TheySaid supports multiple channels so you can meet people where they are. Response rates jump when you give users more ways to reply.

How to distribute:

Go to Distribution and pick from:

- Shareable Link – Drop it in social posts, in-app banners, or support emails

- Website Pop-Up Chat – Trigger a feedback prompt on specific pages (pricing, checkout, account settings)

- QR Code – Print it on packaging, event booths, or retail counters for in-person feedback

- Panel – Tap TheySaid's network to reach net-new respondents when your own list is exhausted

- Email Embed – Send surveys inside lifecycle emails or CS check-ins

Track response rates by channel. If your pop-up converts at 8% and email at 2%, shift more traffic to the pop-up. If QR codes at events pull double-digit response rates, print more codes.
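The channel comparison is plain arithmetic: responses divided by invitations (or impressions, or scans) per channel. Here is a minimal sketch, using made-up counts chosen only to reproduce the 8% and 2% figures above; the `ChannelStats` type is a hypothetical stand-in for whatever export you pull the numbers from.

```python
from dataclasses import dataclass


@dataclass
class ChannelStats:
    name: str
    sent: int       # invitations, impressions, or scans, depending on channel
    responses: int  # replies you count toward the rate

    @property
    def response_rate(self) -> float:
        # Guard against channels that haven't gone out yet.
        return self.responses / self.sent if self.sent else 0.0


# Hypothetical counts, picked to match the 8% vs 2% example.
channels = [
    ChannelStats("email", sent=2000, responses=40),
    ChannelStats("pop-up", sent=1000, responses=80),
]

best = max(channels, key=lambda c: c.response_rate)
for ch in sorted(channels, key=lambda c: c.response_rate, reverse=True):
    print(f"{ch.name}: {ch.response_rate:.1%}")
print("Shift more traffic to:", best.name)
```

Comparing rates rather than raw response counts matters: email produced fewer replies here despite twice the sends.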

Step 7: Push Responses Into Your Workflow Tools

Insights don't help if they sit in a dashboard. The goal is to route feedback to the people who can act on it (support, product, engineering) so fixes happen fast.

TheySaid integrates with the tools your team already uses. When a critical theme appears or someone mentions a blocker, the platform can auto-create a task, ping a Slack channel, or attach the feedback to a CRM record.

How to set it up:

In the left navigation, go to Settings → Integrations:

- Slack – Send alerts to #customer-voice with the top quote and theme summary

- Salesforce / HubSpot – Attach insights to account records; auto-create tasks when themes hit a threshold

- Jira / Linear – Open tickets when the severity or mention count crosses a line you define

Set rules so the right signal goes to the right place. For example:

- Theme = "Billing confusion" AND Severity = High → create Jira ticket + Slack DM to finance lead

- Sentiment = Very Negative AND Plan = Enterprise → alert CS manager

This cuts reaction time from days to hours. Your team sees the feedback when it's still fresh, and users notice when you fix things fast.
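Each rule above is a set of field conditions joined by AND, plus a list of actions. The sketch below models that evaluation in Python to show how one response can trigger several rules at once. Field names, values, and action strings are hypothetical and are not TheySaid's rule syntax; in practice the integrations perform the actions.

```python
from dataclasses import dataclass


@dataclass
class Rule:
    conditions: dict[str, str]  # every field must match (logical AND)
    actions: list[str]

    def matches(self, response: dict[str, str]) -> bool:
        return all(response.get(k) == v for k, v in self.conditions.items())


RULES = [
    Rule({"theme": "Billing confusion", "severity": "High"},
         ["create Jira ticket", "Slack DM finance lead"]),
    Rule({"sentiment": "Very Negative", "plan": "Enterprise"},
         ["alert CS manager"]),
]


def route(response: dict[str, str]) -> list[str]:
    """Collect actions from every rule the tagged response satisfies."""
    actions: list[str] = []
    for rule in RULES:
        if rule.matches(response):
            actions.extend(rule.actions)
    return actions


example = {"theme": "Billing confusion", "severity": "High",
           "sentiment": "Very Negative", "plan": "Enterprise"}
print(route(example))
```

Evaluating every rule, instead of stopping at the first match, is what lets finance and the CS manager both hear about the same unhappy enterprise account.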

Step 8: Let AI Summarize So You Can Focus on Decisions

Reading 200 open-ended responses takes hours. Tagging them manually takes longer. AI does it in seconds and finds patterns you'd miss.

TheySaid's Insights Dashboard auto-tags responses by theme, tracks sentiment over time, and surfaces the quotes that best represent each category. Click any theme and you'll get an AI-generated summary of what's driving it plus suggested next steps.

How to use it:

Open the Insights Dashboard. You'll see:

- Top themes ranked by frequency and severity

- Sentiment trendlines (are people getting happier or more frustrated?)

- Verbatim highlights for each theme
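One simple way to approximate a "frequency and severity" ranking is a two-key sort: count mentions first, then break ties by the worst severity seen. This is a sketch under that assumption only; TheySaid's actual scoring is not documented here, and the tagged data is hypothetical.

```python
from collections import Counter

SEVERITY_ORDER = {"Low": 0, "Medium": 1, "High": 2}

# Hypothetical tagged responses: (theme, severity)
tagged = [
    ("Onboarding confusion", "High"),
    ("Onboarding confusion", "Medium"),
    ("Billing confusion", "High"),
    ("Onboarding confusion", "High"),
    ("Missing integrations", "Low"),
    ("Billing confusion", "High"),
]


def rank_themes(items: list[tuple[str, str]]) -> list[str]:
    """Sort themes by mention count, then by worst severity (both descending)."""
    counts = Counter(theme for theme, _ in items)
    worst: dict[str, int] = {}
    for theme, severity in items:
        worst[theme] = max(worst.get(theme, 0), SEVERITY_ORDER[severity])
    return sorted(counts, key=lambda t: (counts[t], worst[t]), reverse=True)


print(rank_themes(tagged))
```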

Click a theme (say, "Onboarding confusion") and hit AI Summary. You'll get a breakdown: what's causing it, which user segments mention it most, and 2–3 recommended actions.

Export a one-pager with top themes, supporting quotes, and priority actions. Use it for exec share-outs, roadmap planning, or sprint kick-offs. The format is consistent, so stakeholders know what they're looking at and can make calls fast.

Step 9: Close the Loop Automatically and Make It Visible

People stop giving feedback when they think it disappears into a void. If you close the loop ("Hey, we fixed that thing you mentioned"), they're more likely to respond next time. They also stay loyal longer.

TheySaid makes loop-closing automatic. Set up action items with owners and due dates. When the fix ships, send a follow-up message or email that includes the user's original quote. That shows you listened.

How to set it up:

Create an Action Item template with fields for owner, due date, estimated impact, and a link back to the verbatims.

Use Rules to auto-create tasks:

- When Theme = "Billing confusion" AND Severity = High → create task + assign to billing lead + Slack DM

Once the task is marked complete, trigger a follow-up message.

Example: "We heard you say setup was confusing because the API key step wasn't clear. We just added inline help text and a video walkthrough. Give it another shot and let us know if it's better."

Link back to their original feedback so they see you didn't just send a generic update. This turns one-time respondents into repeat contributors.
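The follow-up works because it quotes users back to themselves and only goes out once the fix ships. A minimal sketch of that templating step, assuming each action item stores the respondent's name, original quote, a short fix summary, and a link to the original response; all field names and the `example.com` URL are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ActionItem:
    owner: str
    done: bool
    first_name: str      # respondent's first name
    original_quote: str  # the respondent's own words, verbatim
    fix_summary: str     # what shipped, phrased to follow "We just ..."
    feedback_url: str    # hypothetical link back to the original response


def follow_up_message(item: ActionItem) -> Optional[str]:
    """Return a personal follow-up once the task is complete, else None."""
    if not item.done:
        return None  # only close the loop after the fix ships
    return (
        f'Hi {item.first_name}, you told us: "{item.original_quote}" '
        f"We just {item.fix_summary}. "
        "Give it another shot and let us know if it's better. "
        f"Your original feedback: {item.feedback_url}"
    )


item = ActionItem(
    owner="onboarding lead",
    done=True,
    first_name="Sam",
    original_quote="Setup was confusing because the API key step wasn't clear.",
    fix_summary="added inline help text and a video walkthrough",
    feedback_url="https://example.com/feedback/123",
)
print(follow_up_message(item))
```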

Quick "First Project" Recipe

Here's a plug-and-play setup you can launch today.

Goal: Understand why onboarding takes longer than 15 minutes for new Pro accounts.

Inputs:

- Upload your onboarding doc and recent changelog

- Add a brief: audience = new Pro users; tone = friendly and casual; avoid asking about pricing

Draft:

- Go to Projects → New → Use AI

- Select the Onboarding Interview template

- AI suggests 8 questions; trim to 5

Voice Mode: Turn it on. Keep questions short and conversational.

Distribute:

- Email embed for new Pro users (trigger 24 hours after signup)

- Website pop-up on the "Setup Complete" page

Integrations:

- Slack alerts for "authentication issues" theme

- Jira ticket when severity ≥ High

Insights:

- Review theme trends after 50 responses

- Export AI summary for your next product meeting

Act:

- Assign fixes to owners

- Set auto follow-up to notify users when resolved

Iterate:

- Remove any question with >20% drop-off

- Add one new probe based on AI-suggested gaps

You'll have usable insights in a week and a repeatable process your team can run every quarter.
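To apply the ">20% drop-off" rule from the Iterate step, you need a drop-off number per question. A minimal sketch, assuming drop-off is measured as 1 minus answered/viewed for each question; the counts and the third prompt are hypothetical.

```python
from typing import NamedTuple


class QuestionStats(NamedTuple):
    prompt: str
    viewed: int    # respondents who reached this question
    answered: int  # respondents who answered it


def flag_drop_off(stats: list[QuestionStats], threshold: float = 0.20) -> list[str]:
    """Return prompts whose drop-off (1 - answered/viewed) exceeds the threshold."""
    flagged = []
    for q in stats:
        if q.viewed == 0:
            continue  # nothing to measure yet
        if 1 - q.answered / q.viewed > threshold:
            flagged.append(q.prompt)
    return flagged


# Hypothetical counts for a five-question project.
stats = [
    QuestionStats("Walk me through your first week", 120, 114),
    QuestionStats("What made signup hard?", 114, 105),
    QuestionStats("Which integration did you set up first?", 105, 78),
    QuestionStats("What felt rough about setup?", 78, 72),
    QuestionStats("If we could fix one thing next, what should it be?", 72, 70),
]
print(flag_drop_off(stats))  # ['Which integration did you set up first?']
```

Only the third question loses more than a fifth of the people who reach it (about 26%), so it is the one to cut or rewrite.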

Key Takeaways

- Open-ended questions pull in richer context and let AI follow up dynamically

- Upload product docs and briefs so AI asks relevant, on-brand questions

- Use templates and AI drafting to launch fast without blind spots

- Conditional logic keeps surveys short and boosts completion rates

- Voice mode captures sentiment and emotion that text can't convey

- Distribute across multiple channels to reach more users where they are

- Integrate with Slack, CRMs, and project tools so feedback drives action

- Let AI summarize themes and suggest next steps to save hours of manual work

- Close the loop with automatic follow-ups so users know you listened

FAQs

What's the difference between AI follow-ups and branching logic?

Branching logic routes users based on predefined rules you set (if NPS ≤ 6, show this question). AI follow-ups are dynamic. The model asks clarifying questions based on what someone just said, even if you didn't anticipate that response.

Can I use TheySaid if I don't have a huge user base?

Yes. You can use the Panel feature to reach net-new respondents outside your own list. You can also run smaller, more frequent pulses instead of waiting for thousands of responses.

How does voice mode affect response rates?

Voice typically boosts completion by 15–30% because it's faster and more convenient. Users can respond while multitasking. Transcription quality is high, and sentiment analysis works just as well as it does with text.

What happens to responses after I close a project?

They stay in your account. You can export raw data, revisit insights, or reopen the project if you want to collect more feedback later. Nothing gets deleted unless you manually remove it.

Can I customize the AI's tone or language?

Yes. In the Context panel, add tone guidelines (casual, formal, empathetic) and examples of how you'd phrase follow-ups. The AI adapts its style to match. You can also review and edit suggested follow-up questions before they go live.

How do I know when to act on a theme?

TheySaid shows frequency and severity scores. If a theme appears in 20% of responses and has high sentiment intensity, prioritize it. You can also set thresholds like "alert me when 'billing confusion' is mentioned by 10+ users in a week."
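Both rules in that answer are simple threshold checks. A minimal sketch, assuming you have per-theme mention counts and timestamped (user, time) mentions, for example from an export; the function names and data shapes are hypothetical, not TheySaid's alerting API.

```python
from datetime import datetime, timedelta


def should_prioritize(theme_mentions: int, total_responses: int,
                      high_intensity: bool, share_threshold: float = 0.20) -> bool:
    """Prioritize when a theme reaches the share threshold AND intensity is high."""
    if total_responses == 0:
        return False
    return theme_mentions / total_responses >= share_threshold and high_intensity


def weekly_alert(mentions: list[tuple[str, datetime]], now: datetime,
                 min_users: int = 10) -> bool:
    """Alert when at least `min_users` distinct users mentioned the theme in 7 days."""
    cutoff = now - timedelta(days=7)
    recent_users = {user for user, ts in mentions if ts >= cutoff}
    return len(recent_users) >= min_users


now = datetime(2024, 6, 10)
mentions = [(f"user{i}", now - timedelta(days=i % 5)) for i in range(12)]
print(should_prioritize(45, 200, high_intensity=True))  # True (22.5% of responses)
print(weekly_alert(mentions, now))  # True (12 distinct users this week)
```

Counting distinct users rather than raw mentions keeps one very vocal respondent from tripping the alert alone.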
