Leading and Loaded Questions: The Survey Killers (And How to Fix Them)

Want better data? Stop asking questions that push users toward specific answers. Leading and loaded questions wreck your surveys without you knowing it.

Survey results only help when they reflect reality. Bad questions get bad data. Bad data leads to bad business decisions.

Let's fix this.

What Makes Questions "Leading" or "Loaded"?

Your brain holds opinions. These slip into your questions. Users pick up these cues and answer the way they think you want, not the way they really feel.

Two types of questions cause this problem:

Leading questions push respondents toward a specific answer through their structure and wording. They hint at what answer you want.

Loaded questions sneak assumptions into the question itself, forcing respondents to accept premises they might not agree with.

Both skew your data and waste your time.

The Difference: Leading vs. Loaded Questions

Though often confused, leading and loaded questions manipulate in distinct ways:

Leading questions steer respondents toward a specific answer by how they're phrased. They subtly indicate what response you want.

Example: "How satisfied were you with our excellent customer service?"

The problem: The word "excellent" signals you expect a positive rating.

Loaded questions contain built-in assumptions that trap respondents. No matter how they answer, they accept premises that may be false for them.

Example: "Where do you typically use our mobile app?"

The problem: This assumes they use your app at all, when they might not.

Think of it this way: Leading questions push for a certain answer, while loaded questions force acceptance of hidden assumptions. Leading questions steer respondents toward a destination; loaded questions pack hidden baggage.

The most dangerous survey questions often combine both tactics: they contain assumptions and push for specific answers.

Leading Questions: The Subtle Manipulators

As mentioned, leading questions guide users down a predetermined path. They use subtle (or not-so-subtle) cues that signal the "right" answer.

Types of Leading Questions

- Assumption-based questions: These presuppose something about the respondent: "How much did you enjoy our product?" (assumes they enjoyed it)

- Scale-based questions with bias: These offer unbalanced rating options. For example, "How satisfied were you with our service?" with these choices:

  - Extremely satisfied

  - Very satisfied

  - Satisfied

  - Somewhat satisfied

  - Not satisfied

  (Four positive options, one negative)

- Questions with interconnected statements: These plant an idea before asking the question: "Most users love our mobile app. What do you think about it?"

- Direct implication questions: These presuppose future behavior: "When you recommend our product to friends, what features will you highlight?" (assumes they will recommend it)

- Coercive questions: These practically force agreement: "Our customer support team met your needs, didn't they?"

Loaded Questions: The Assumption Traps

Loaded questions build assumptions into their structure. No matter how someone answers, they validate premises they might not agree with.

Examples of Loaded Questions

"Where do you prefer using our app?" (assumes they use it)

"How has our new feature improved your workflow?" (assumes improvement)

"Which aspect of our service do you find most valuable?" (assumes they find value)

"Have you stopped ignoring our emails?" (assumes they used to ignore them)

Why These Questions Kill Your Data

When you use leading or loaded questions:

- You get what you want to hear, not what you need to hear

- You miss critical insights about problems or opportunities

- Your data becomes useless for making solid business decisions

- You waste time and resources collecting meaningless feedback

- You miss growth opportunities hidden in honest feedback

How to Avoid Leading and Loaded Questions

Creating better questions isn't just about avoiding mistakes. It's about crafting prompts that unlock honest, valuable feedback. This four-step process helps you turn biased questions into reliable data-collection tools.

Step 1: Hunt for Assumptions

Assumptions hide in questions like splinters: small but painful. Every survey creator brings their own perspective, which can sneak into question wording.

Review each question through a critical lens:

- Identify hidden premises: What facts does your question take for granted? For example, "How often do you use our mobile app?" assumes the respondent uses your app at all.

- Check for presumed behaviors: Does your question assume specific actions or habits? "Where do you typically read our newsletter?" assumes they read it.

- Look for emotional assumptions: Does your question presume feelings? "What do you love most about our service?" assumes positive emotions.

- Test with diverse audiences: Ask people with different perspectives to review your questions. Someone unfamiliar with your product might spot assumptions you've missed.

Try this exercise: For each question, add the phrase "if at all" or "if any" at the end (for example, "How often do you use our mobile app, if at all?"). If the addition changes the meaning significantly, you've found an assumption.

Step 2: Strip Out Loaded Language

Words carry emotional baggage. When crafting survey questions, think like a journalist seeking facts, not a marketer selling benefits.

Here's how to neutralize your language:

- Replace subjective adjectives: Change "our user-friendly interface" to simply "our interface." Let respondents decide if it's user-friendly.

- Cut comparative terms: Remove words like "better," "improved," or "enhanced." Instead of "How has our improved checkout process affected your shopping?" ask "How has our current checkout process affected your shopping?"

- Watch for subtle signals: Even seemingly neutral terms can signal bias. "Do you agree that..." subtly pushes for agreement. Try "What is your opinion on..." instead.

- Beware of jargon: Internal company terms or industry jargon can confuse respondents and limit responses.

Red flag words to avoid:

- Superlatives: best, worst, greatest, most, least

- Value judgments: good, bad, helpful, useless

- Intensifiers: very, extremely, completely

- Minimizers: just, only, simply

- Assumptive qualifiers: still, already, yet
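If you review many surveys, you can turn the red-flag list into a quick first-pass check. The sketch below is a hypothetical Python helper (`flag_words` and `RED_FLAGS` are names chosen for illustration, not part of any survey tool) that does simple whole-word matching against the five categories above. It can't read context, so treat each hit as a prompt for human review: "most" in "most recent purchase" is harmless, and a biased question that avoids these exact words will pass.

```python
import re

# Red-flag words from the list above, grouped by category.
RED_FLAGS = {
    "superlative": ["best", "worst", "greatest", "most", "least"],
    "value judgment": ["good", "bad", "helpful", "useless"],
    "intensifier": ["very", "extremely", "completely"],
    "minimizer": ["just", "only", "simply"],
    "assumptive qualifier": ["still", "already", "yet"],
}


def flag_words(question: str) -> list[tuple[str, str]]:
    """Return (word, category) pairs for every red-flag word in the question, in order."""
    # Lowercase and split into words, ignoring punctuation.
    words = re.findall(r"[a-z']+", question.lower())
    hits = []
    for word in words:
        for category, terms in RED_FLAGS.items():
            if word in terms:
                hits.append((word, category))
    return hits


if __name__ == "__main__":
    draft = "Which of our helpful features do you use most?"
    for word, category in flag_words(draft):
        print(f"'{word}' ({category})")
```

Running it on the draft question flags "helpful" as a value judgment and "most" as a superlative, while a neutral rewrite such as "How would you rate our product features?" returns no hits.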

Step 3: Balance Your Options

Multiple-choice options create a frame of reference that influences how people think about your question. Balanced options yield balanced data.

Create fair choice sets with these techniques:

- Audit the emotional spectrum: Count your positive vs. negative options. Aim for symmetry with equal numbers on both sides of neutral.

- Use consistent scaling: When creating Likert scales, ensure the distance between options feels even. The gap between "Somewhat satisfied" and "Very satisfied" should feel similar to the gap between "Somewhat dissatisfied" and "Very dissatisfied."

- Consider cultural context: Response patterns vary across cultures. Some cultures avoid extreme responses, while others gravitate toward them. For international surveys, account for these differences.

- Include meaningful escape options: Don't force responses when they don't apply. Options like "Not applicable," "I prefer not to answer," or "I don't know" provide valid data points rather than forcing inaccurate selections.

- Test with real users: Before launching your survey, test response options with a small group. Ask what's missing and what feels unclear.

Example of balanced options:

Instead of:

- Extremely satisfied

- Very satisfied

- Satisfied

- Somewhat dissatisfied

- Not satisfied

Consider:

- Very satisfied

- Somewhat satisfied

- Neither satisfied nor dissatisfied

- Somewhat dissatisfied

- Very dissatisfied

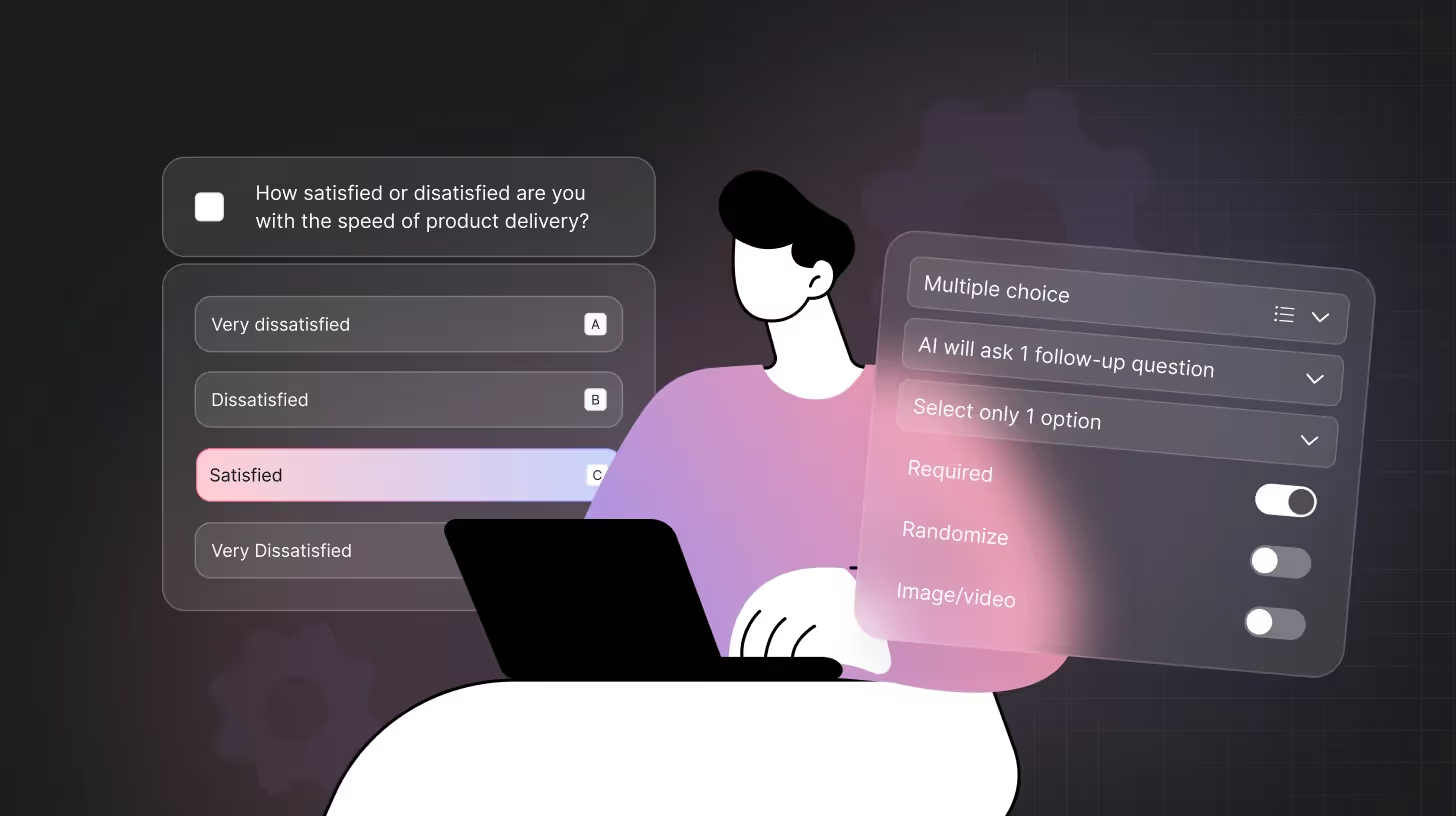
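The symmetry audit from the first technique is simple enough to automate once each option is labeled. The sketch below assumes the survey author tags every option by hand as positive (+1), neutral (0), or negative (-1); `audit_scale` is a hypothetical helper, not a feature of any survey platform. It counts each side and treats a scale as balanced when reading it backwards with the signs flipped gives the same sequence. It can't judge whether the gaps between labels feel even; that still needs testing with real users.

```python
# Each option is paired with a valence the survey author assigns by hand:
# +1 = positive, 0 = neutral, -1 = negative.
def audit_scale(options: list[tuple[str, int]]) -> dict[str, object]:
    """Count positive, neutral, and negative options and check whether the scale is symmetric."""
    valences = [valence for _, valence in options]
    result: dict[str, object] = {
        "positive": sum(1 for v in valences if v > 0),
        "neutral": sum(1 for v in valences if v == 0),
        "negative": sum(1 for v in valences if v < 0),
    }
    # Balanced: the scale mirrors itself around its midpoint, so reversing
    # the order and flipping every sign yields the same sequence.
    result["balanced"] = valences == [-v for v in reversed(valences)]
    return result


if __name__ == "__main__":
    original = [
        ("Extremely satisfied", 1),
        ("Very satisfied", 1),
        ("Satisfied", 1),
        ("Somewhat dissatisfied", -1),
        ("Not satisfied", -1),
    ]
    revised = [
        ("Very satisfied", 1),
        ("Somewhat satisfied", 1),
        ("Neither satisfied nor dissatisfied", 0),
        ("Somewhat dissatisfied", -1),
        ("Very dissatisfied", -1),
    ]
    print(audit_scale(original))  # 3 positive, 0 neutral, 2 negative, not balanced
    print(audit_scale(revised))   # 2 positive, 1 neutral, 2 negative, balanced
```

With these labels, the original scale leans positive and has no neutral midpoint, while the revised scale mirrors cleanly around "Neither satisfied nor dissatisfied."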

Step 4: Use Skip Logic Strategically

Skip logic (also called branching logic) creates personalized survey paths based on previous answers. This prevents respondents from facing questions that don't apply to them.

Master skip logic with these approaches:

- Start with screening questions: Before diving into details, confirm relevance. "Have you purchased from us in the last 6 months?" determines whether purchase experience questions apply.

- Create logical pathways: Map your survey as a decision tree, not a linear path. Different response branches should make sense based on previous answers.

- Use neutral language in screening questions: Even qualifying questions can contain bias. "Do you use our product?" is better than "How frequently do you use our product?"

- Leverage AI for dynamic surveys: TheySaid's AI can automatically adapt follow-up questions based on previous responses, creating conversation-like experiences that feel natural and gather deeper insights.

- Respect respondent time: Only ask relevant questions. If someone indicates they've never used a feature, don't ask five follow-up questions about that feature.

Advanced skip logic strategy: Create confidence-based pathways. If someone indicates low confidence in their knowledge of a topic, offer simplified questions. For high-confidence respondents, dig deeper with more detailed questions.
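Under the hood, skip logic is a small decision tree: each question decides which question comes next based on the answer it receives. The sketch below is a hypothetical Python model (the question IDs, wording, `Question` class, and `run_path` helper are illustrative assumptions, not how TheySaid or any specific platform stores surveys). It combines a neutral screening question with the confidence-based branching described above.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Question:
    text: str
    # Given this question's answer, return the next question's ID, or None to end the survey.
    next_id: Callable[[str], Optional[str]]


# Hypothetical survey: a neutral screening question gates the purchase questions,
# and a self-rated familiarity question routes to a simpler or more detailed path.
SURVEY: dict[str, Question] = {
    "screen": Question(
        "Have you purchased from us in the last 6 months? (yes/no)",
        lambda answer: "confidence" if answer == "yes" else None,
    ),
    "confidence": Question(
        "How familiar are you with our checkout options? (low/high)",
        lambda answer: "checkout_simple" if answer == "low" else "checkout_detailed",
    ),
    "checkout_simple": Question(
        "How would you rate the checkout process overall?",
        lambda answer: None,
    ),
    "checkout_detailed": Question(
        "How would you rate each checkout step: cart, payment, and confirmation?",
        lambda answer: None,
    ),
}


def run_path(answers: dict[str, str], start: str = "screen") -> list[str]:
    """Walk the survey using pre-recorded answers and return the IDs of the questions asked."""
    asked: list[str] = []
    current: Optional[str] = start
    while current is not None:
        asked.append(current)
        answer = answers.get(current, "")
        current = SURVEY[current].next_id(answer)
    return asked


if __name__ == "__main__":
    print(run_path({"screen": "no"}))  # ['screen']
    print(run_path({"screen": "yes", "confidence": "low"}))  # ['screen', 'confidence', 'checkout_simple']
```

A respondent who answers "no" to the screening question is asked nothing else, while a "yes" leads to the familiarity question and then to either the simple or the detailed checkout question. Keeping the routing on each question, rather than in one long chain, makes it easy to see every path a respondent can take.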

By applying these four steps to every question in your survey, you turn potentially misleading questions into clear windows on your respondents' true thoughts and experiences. The result? Data you can trust to make better business decisions.

Before/After Examples: Fix Your Questions

Leading Question: "How much did you enjoy our amazing product features?"

Fixed Version: "How would you rate our product features?"

Loaded Question: "Where do you use our app most often?"

Fixed Version: "Do you use our app? [Yes/No]" (If yes) "Where do you typically use it?"

Leading Question: "Don't you agree our customer support team responds quickly?"

Fixed Version: "How would you rate our customer support team's response time?"

Loaded Question: "What's your favorite part about our website?"

Fixed Version: "Is there anything about our website you particularly like? If so, what?"

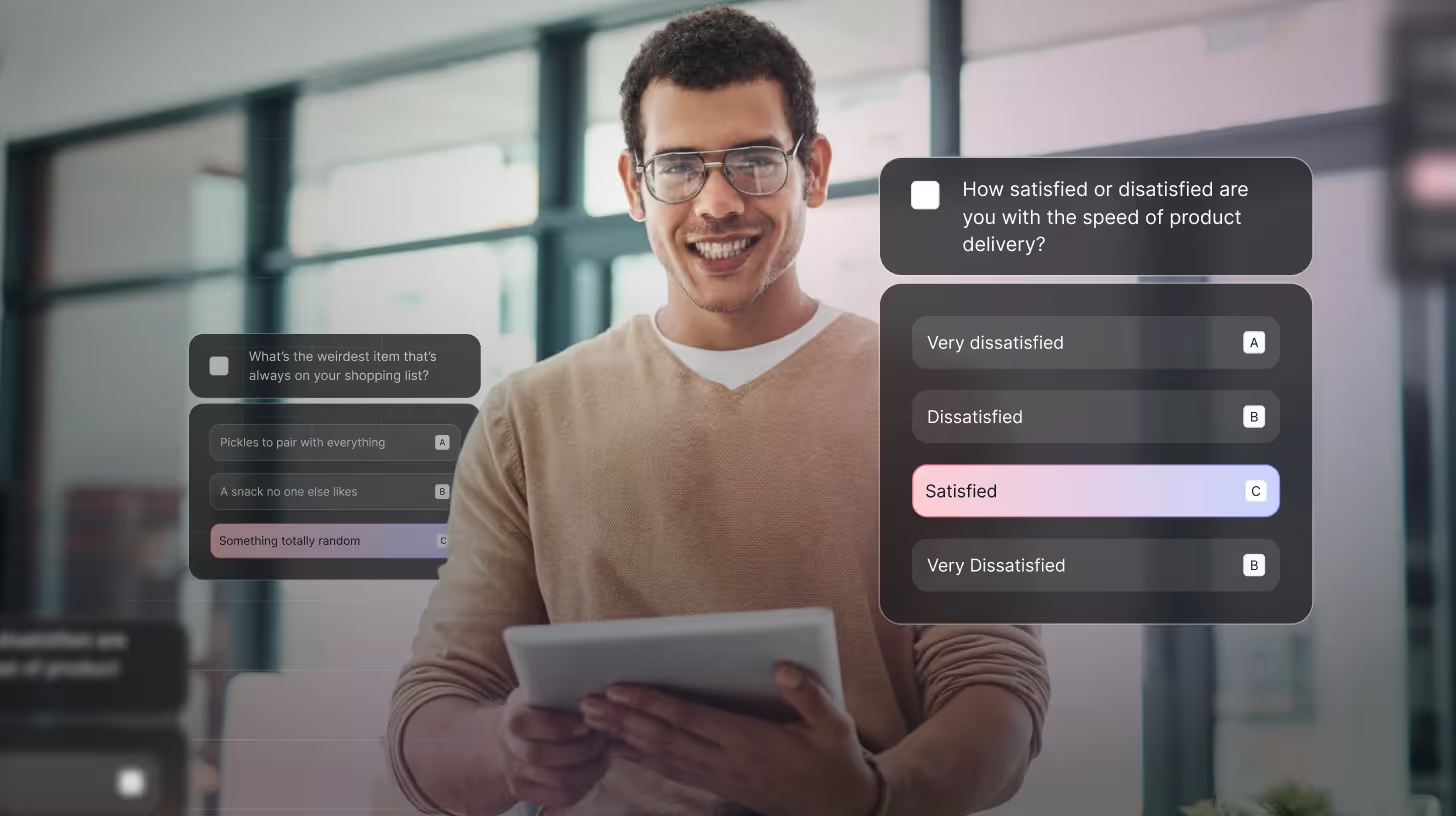

The AI Advantage: How TheySaid Makes This Easy

Traditional surveys lock you into static questions. TheySaid's AI helps you:

- Detect leading and loaded questions as you write them

- Generate neutral alternatives to biased questions

- Create dynamic conversation paths that adapt to user responses

- Follow up organically on surprising answers

- Analyze sentiment beyond the literal answers

AI conversation lets users express nuanced opinions that are hard to capture in traditional surveys. They talk naturally. Your data improves.

Start Creating Better Surveys Today

Bad questions waste time and money. They feed you false information that leads to bad decisions.

With TheySaid's AI survey platform, you can:

- Create bias-free questions

- Get deeper insights through conversation

- Understand not just what users think, but why

- Turn raw feedback into actionable insights

Stop guessing what your users think. Start asking questions that get real answers.

Try TheySaid now and see how much better your data can be.
