User Testing Tools Create More Problems Than They Solve

Development teams need user feedback before launch, but existing tools make testing harder than it should be. Extracting key insights means watching hours of video recordings, and skipping any session leaves you worried that you missed an important finding. Professional testing panels supply career testers whose expertise skews results in ways your real customers never would, and the panels are small enough that finding a sample matching your target audience is difficult. Even seasoned UX researchers spend hours setting up test plans and getting approvals before testing can begin. Your user test data sits isolated from surveys, interviews, and other feedback, so you cannot analyze everything in one place. Meanwhile, clunky screen recorders require outdated software installations, and frustrated customers abandon sessions before providing valuable input.

TheySaid eliminates user testing friction while amplifying insights.

Rather than struggling with complicated workflows and fragmented feedback, you get user tests that work seamlessly for real customers while automatically revealing key insights. This approach delivers authentic user behavior data while eliminating the complexity that slows down product teams.


How TheySaid Enables Pre-Launch User Testing Success

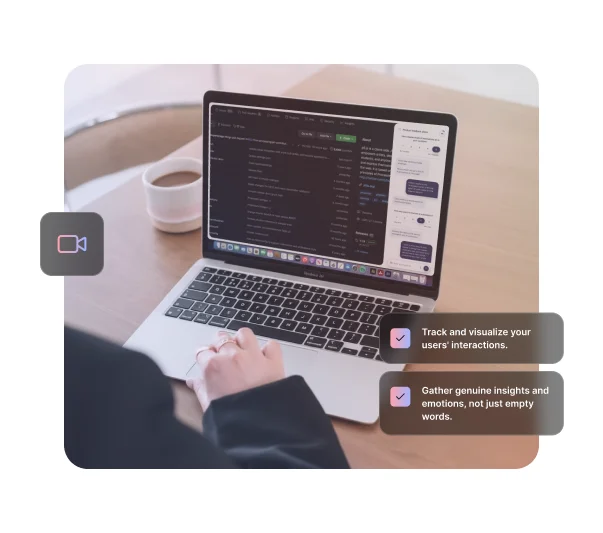

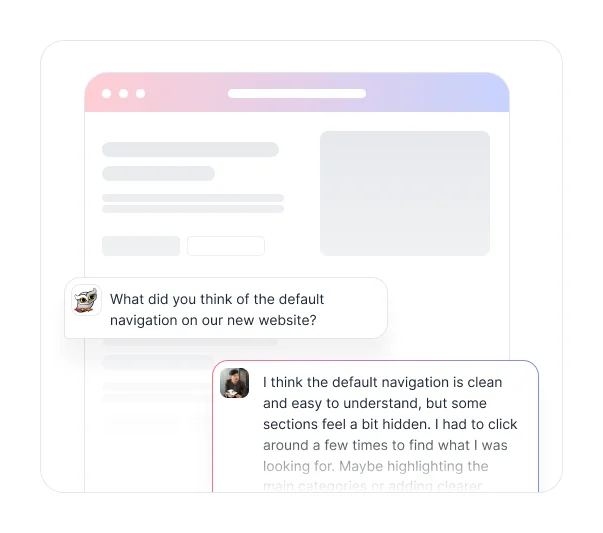

Capture Real User Behavior Through Screen Recording

Record actual user interactions with your prototypes, staging environments, or beta versions while they navigate through key workflows and features. For most sites, users don't even need to install a browser extension to participate.

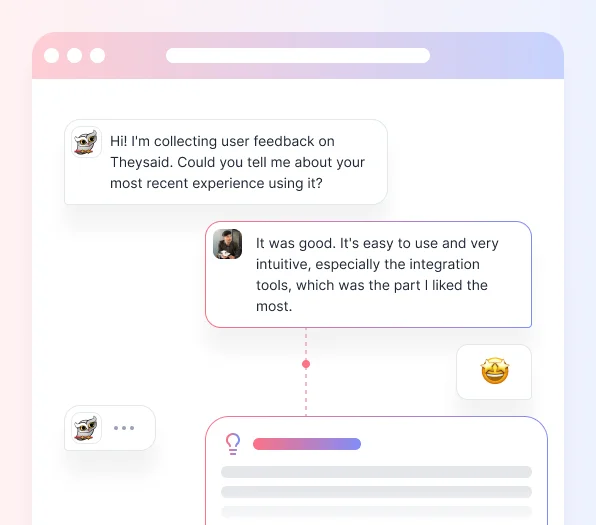

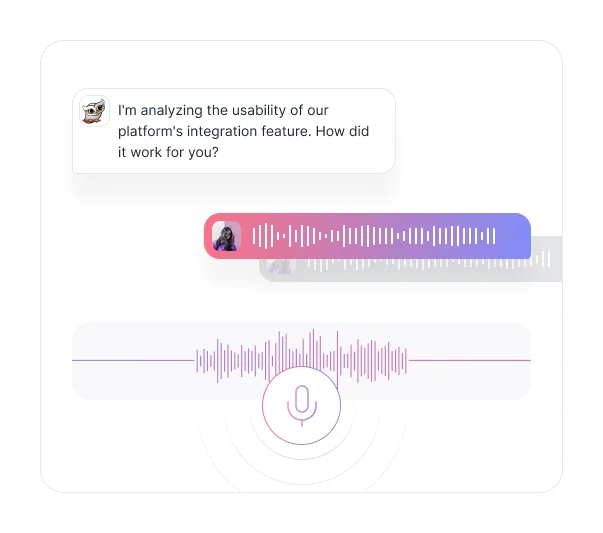

Understand User Thinking Through Voice Commentary

Gather spoken feedback as users work through tasks. AI guides them through the test and asks probing questions that reveal their thought processes, expectations, and the reasoning behind their actions.

Identify Usability Issues Before They Impact Customers

Spot navigation problems, unclear interfaces, and workflow interruptions during the testing phase when fixes are still cost-effective to implement.

Validate Feature Adoption Through Observed Usage

Watch how users actually discover and use new features compared to how your team intended them to be used.


Advanced Pre-Launch Testing Platform

Real Customer Testing Through Multiple Channels

Recruit your actual customers rather than professional testers through email outreach, website pop-ups, social media sharing, and direct links, ensuring feedback comes from people who genuinely represent your target audience.

AI-Powered Session Analysis

Eliminate hours of manual video review. Automated analysis immediately identifies usability patterns, friction points, and critical issues across testing sessions, and highlights the problems that need attention while development resources are still available.

Seamless Screen and Voice Recording

Enable effortless user participation through browser-based recording that requires no software installation or technical setup, capturing complete user testing sessions with both screen activity and voice commentary.

Video Playback with Question Timestamps

Review user testing sessions through organized video playback that jumps directly to specific questions or tasks, so development teams can analyze sessions without watching entire recordings.

Prototype and Live Product Testing

Test Figma prototypes, staging environments, or beta versions by providing URLs that users navigate while their interactions are recorded for comprehensive usability validation.

Before & After

Without TheySaid

Hours of Manual Video Analysis

Extracting key insights from user testing videos means watching hours of recordings. Teams that skip sessions worry about overlooking critical usability issues buried in lengthy recordings.

Professional Tester Bias

User testing tools rely on small panels of professional testers whose expertise skews results in ways that feedback from real customers would not. The small panel size also makes it difficult to find large samples that match your actual target audience.

Time-Consuming Test Setup

Even experienced UX researchers spend hours setting up test plans, configuring scenarios, and getting approvals before testing can begin, delaying critical validation when launch timelines are tight.

Fragmented Feedback Repository

User testing data sits isolated in separate dashboards from surveys, interviews, and other feedback, preventing comprehensive analysis and unified insights across all customer touchpoints.

Clunky Screen Recording Software

Traditional tools require installing outdated screen recorders that are hard to use and frequently cause real customers to abandon testing sessions before providing valuable feedback.

With TheySaid

Instant AI-Powered Insights

AI immediately extracts key findings from user testing sessions without requiring manual video review, highlighting critical usability patterns and issues as testing completes rather than after hours of analysis.

Real Customer Participation

Deploy user testing through multiple channels to recruit actual customers rather than professional testers, ensuring feedback comes from people who genuinely represent your target audience and usage patterns.

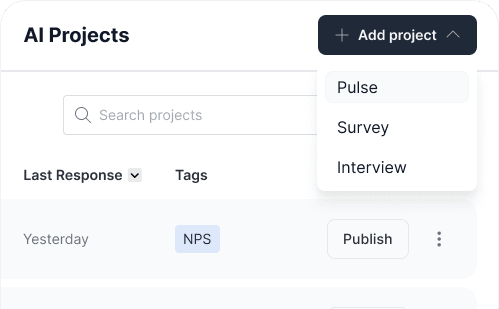

Rapid Test Deployment

Launch user testing projects in minutes using AI-generated scenarios and templates, eliminating lengthy setup processes and approval cycles that delay validation timelines.

Unified Feedback Platform

Centralize user testing results alongside surveys, interviews, polls, and other feedback in one platform for comprehensive analysis and cross-channel insights about customer experience.

Seamless Browser-Based Testing

Enable effortless participation through modern browser-based recording that requires no software installation, allowing real customers to complete testing sessions without technical barriers or frustration.

Don't Launch Blind - See How Users Really Experience Your Product

